Generative AI has immense potential to take chatbots to the next level by enabling more natural, human-like conversations. Digital humans have been around for years, but limitations in language understanding and reasoning restricted what they could do. Now, with advances in natural language processing driven by large language models, chatbots can draw on the broad knowledge and natural communication style these models learn from human-written text. Customers can feel understood and have their needs addressed efficiently through natural dialogue with digital agents. Generative AI opens new possibilities for personalized, satisfying exchanges between customers and automated assistants.

In this workshop, we will learn to do the following:

- Ingest data from various data sources and index it in Amazon Kendra (for our PoC, we’ll use Amazon service FAQ docs to build a customer service assistant)

- Build a Lex Chatbot that leverages built-in intents

- Augment your chatbot with Generative AI, specifically Anthropic’s Claude V2 on Amazon Bedrock

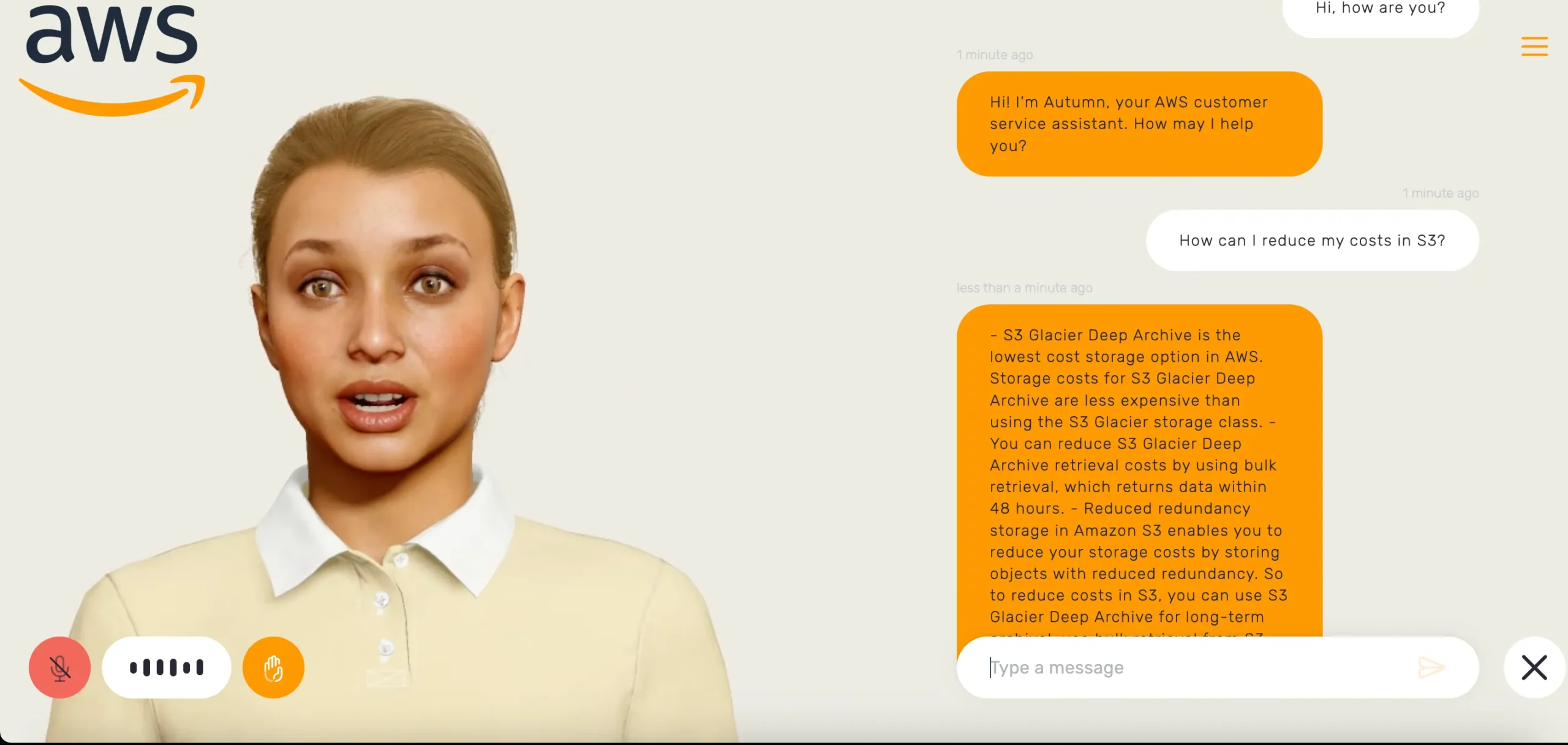

- Create a digital human front-end for your Lex Chatbot

Solution

This project uses the following AWS services:

- Amazon Lex: To build our chatbot functionality

- AWS Lambda: Used to call Bedrock from our Lex Chatbot Intents

- Amazon Bedrock: We will use Anthropic Claude hosted on Amazon Bedrock. To ground the bot’s answers in our Kendra index, we’ll use LangChain’s retrieval orchestration (the RetrievalQA chain).

- Amazon Kendra: We’ll create a Kendra Index that has SageMaker FAQs to emulate a customer service agent for AWS.

- Soul Machines: The digital person front end for our application

Creating your Lex Bot

Amazon Lex is a service for building conversational interfaces into applications using voice and text. It allows developers to create chatbots and other types of conversational AI that can understand natural language, convert speech to text, and interact with users.

Lex has a few key components to it:

- Intents: An intent represents an action that the user wants to perform or get from the chatbot. Intents categorize the user’s intent and map it to desired outcomes.

- Slots: Slots are parameters for intents. They represent variable pieces of data that need to be collected from the user in order to fulfill an intent.

- Utterances: Sample spoken or written phrases that map to intents. Lex uses them to learn how to recognize an intent from user input.

- Bot: The chatbot itself, configured with intents, slots, and other settings, which carries on conversations with users.

- Lambda functions: Logic that the Lex bot invokes to fulfill intents. This can involve calling APIs, querying data, performing calculations, and so on. We’ll create a Lambda function that sends API requests to Anthropic Claude on Amazon Bedrock.

For our digital human, we’ll use two Lex intents:

- WelcomeIntent: A basic intent that introduces our digital human.

- FallbackIntent: Passes the user’s query to our LLM (Claude V2) and returns a response suited to a chatbot. This is the core functionality of our digital human.

Now, let’s get into the console and create the WelcomeIntent.

1. Create a Lex bot with basic permissions, and keep all settings as default.

2. Name your first intent WelcomeIntent, then scroll down to utterances and add some sample phrases the user might start with:

hello

hi

how are you

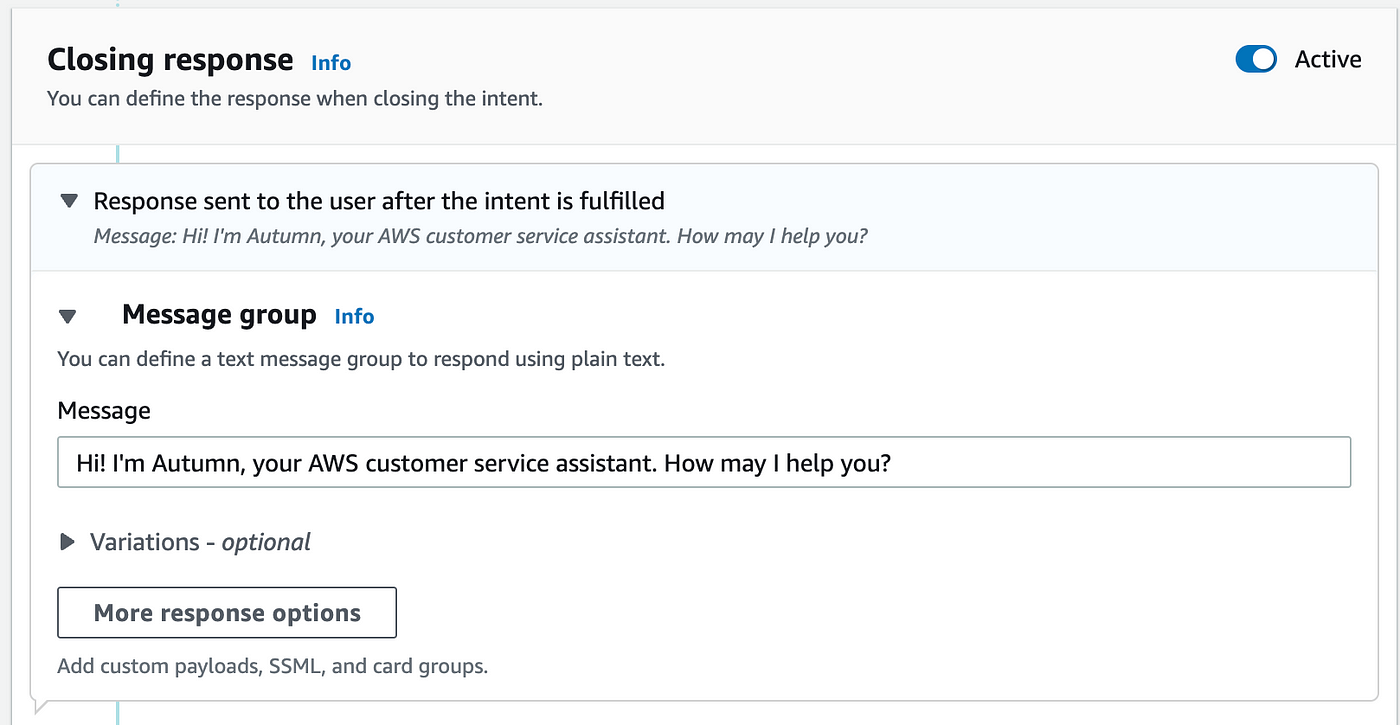

3. In the closing response, add a message that your digital human says when the user speaks one of these utterances. I’m naming my customer service assistant Autumn because of the beautiful foliage I see out my window. Click Save intent, then click Build.

Hit Test, and try out one of your utterances. You’ve made your first intent!
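If you’d rather script the console steps above, the Lex V2 model-building API (the lexv2-models client in boto3) can create the same intent. This is a minimal sketch that assumes you already have a bot ID and an en_US locale on the DRAFT version; build_welcome_intent_request and create_welcome_intent are hypothetical helper names, not part of the SDK.

```python
"""Sketch: create WelcomeIntent with the Lex V2 model-building API.

Assumes an existing bot (bot_id) with an en_US locale on the DRAFT version.
The helper names here are hypothetical, not part of boto3.
"""


def build_welcome_intent_request(bot_id, utterances, closing_message,
                                 locale_id="en_US"):
    """Return keyword arguments for the lexv2-models create_intent call."""
    return {
        "botId": bot_id,
        "botVersion": "DRAFT",  # intents are edited on the DRAFT version
        "localeId": locale_id,
        "intentName": "WelcomeIntent",
        # Each sample utterance is wrapped in its own dict.
        "sampleUtterances": [{"utterance": u} for u in utterances],
        # Closing response the bot says once the intent is recognized.
        "intentClosingSetting": {
            "closingResponse": {
                "messageGroups": [
                    {"message": {"plainTextMessage": {"value": closing_message}}}
                ]
            },
            "active": True,
        },
    }


def create_welcome_intent(client, bot_id, utterances, closing_message):
    """Create the intent, then rebuild the locale so the change takes effect."""
    request = build_welcome_intent_request(bot_id, utterances, closing_message)
    response = client.create_intent(**request)
    client.build_bot_locale(botId=bot_id, botVersion="DRAFT", localeId="en_US")
    return response["intentId"]
```

With a real client from boto3.client("lexv2-models"), you would call create_welcome_intent(client, "<bot-id>", ["hello", "hi", "how are you"], "<closing message>").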

Creating a Kendra Index for your docs

Amazon Kendra is an intelligent enterprise search service that helps you search across different content repositories with built-in connectors. Let’s create a Kendra index that uses sample AWS docs as our data source.

- Go to the Amazon Kendra page and create a Kendra index with basic permissions. The index should take a few minutes to provision.

- Hit Data Sources and choose Sample AWS Documentation. Create a name for this data source, and add it to your Kendra index. We’ll use LangChain in the next section to augment our LLM’s input with our documentation.
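Before wiring up LangChain, it can help to confirm the data source has synced by querying the index directly with Kendra’s Retrieve API. This is a sketch under the assumption that you pass in a boto3 Kendra client; retrieve_passages and format_context are hypothetical helpers, and the index ID is a placeholder.

```python
"""Sketch: query a Kendra index with the Retrieve API and format the results.

retrieve_passages and format_context are hypothetical helper names.
"""


def retrieve_passages(kendra_client, index_id, query, page_size=3):
    """Return (title, excerpt) pairs for the passages Kendra considers relevant."""
    response = kendra_client.retrieve(
        IndexId=index_id, QueryText=query, PageSize=page_size
    )
    return [
        (item.get("DocumentTitle", ""), item.get("Content", ""))
        for item in response.get("ResultItems", [])
    ]


def format_context(passages):
    """Join passages into one block of text, roughly how a RAG prompt uses them."""
    return "\n\n".join(f"{title}\n{content}" for title, content in passages)
```

For example, with kendra = boto3.client("kendra", region_name="<kendra-index-region>"), calling retrieve_passages(kendra, "<kendra-index-id>", "What is Amazon Lex?") should return a few passages once the data source sync has finished.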

Using AWS Lambda for Generative AI

Now, let’s set up our FallbackIntent. This intent is invoked whenever the user’s input doesn’t match another intent, such as a WelcomeIntent utterance. Instead of returning a hard-coded response, the intent uses a Lambda function to call our LLM (Anthropic Claude hosted on Amazon Bedrock).

1. Go to AWS Lambda in the AWS console and create a Lambda function. Use the latest version of Python as your runtime.

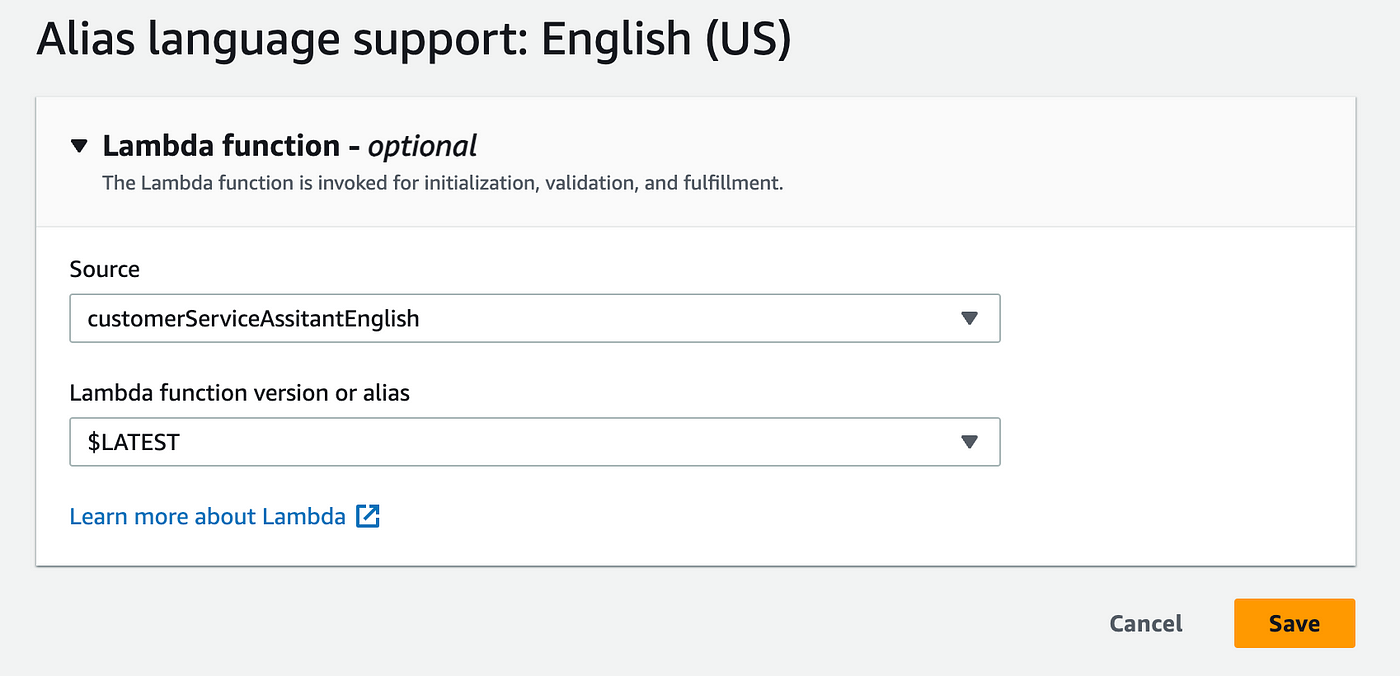

2. Back in the Lex console, choose the Aliases section under Deployment for your Lex bot. Select TestBotAlias, scroll down to Languages, and click English. (Aliases point to specific versions of your bot, and you can use an alias to change which bot version is used.) Under Source, select the latest version of your Lambda function and click Save.

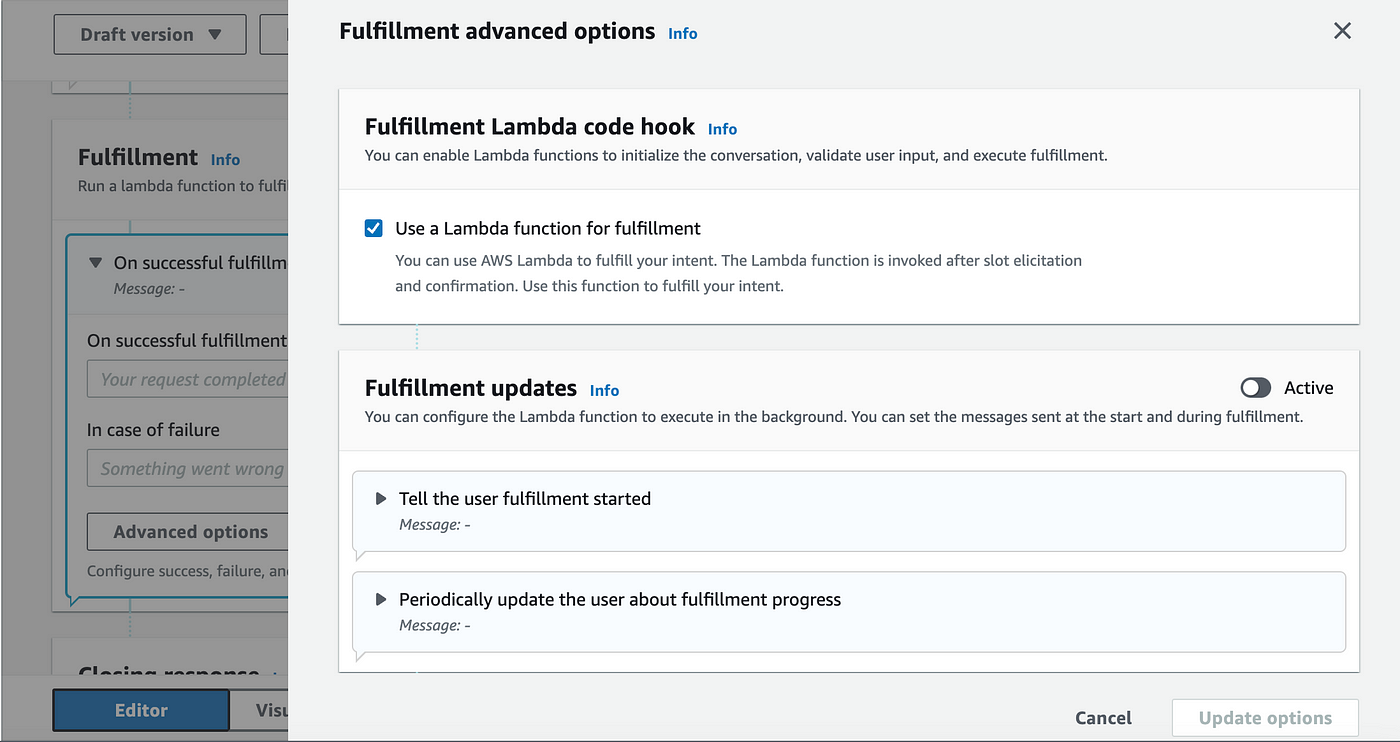
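The alias setting above can also be applied with the lexv2-models update_bot_alias call, which takes per-locale settings containing a Lambda code hook. This sketch assumes TestBotAlias points at the DRAFT version and that you supply the alias ID and function ARN yourself; alias_lambda_settings and attach_lambda_to_alias are hypothetical helpers.

```python
"""Sketch: attach a Lambda function to a Lex V2 bot alias for one locale.

alias_lambda_settings and attach_lambda_to_alias are hypothetical helpers;
IDs and ARNs are supplied by the caller.
"""


def alias_lambda_settings(lambda_arn, locale_id="en_US"):
    """Return botAliasLocaleSettings that enable a Lambda code hook."""
    return {
        locale_id: {
            "enabled": True,
            "codeHookSpecification": {
                "lambdaCodeHook": {
                    "lambdaARN": lambda_arn,
                    # Interface version for the Lex V2 code hook payload.
                    "codeHookInterfaceVersion": "1.0",
                }
            },
        }
    }


def attach_lambda_to_alias(client, bot_id, alias_id, alias_name, lambda_arn,
                           bot_version="DRAFT"):
    """Point the alias's en_US locale at the given Lambda function."""
    return client.update_bot_alias(
        botId=bot_id,
        botAliasId=alias_id,
        botAliasName=alias_name,
        botVersion=bot_version,
        botAliasLocaleSettings=alias_lambda_settings(lambda_arn),
    )
```

If you go the API route, Lex also needs permission to invoke the function (a resource-based policy on the Lambda function for the lexv2.amazonaws.com principal); the console generally takes care of this for you.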

3. FallbackIntent is a default intent created by Lex. In the intent, click on Advanced options and check Use a Lambda function for fulfillment. Now, whenever FallbackIntent is invoked, the event data is sent to the lambda function above.
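Before writing the handler, it helps to see what that event roughly looks like. The dictionary below is a trimmed, hand-written illustration of a Lex V2 fulfillment event, not a captured one (real events carry more keys, such as bot and interpretations), and describe_event is a hypothetical helper that pulls out the fields our code relies on.

```python
"""Sketch: the fields of a Lex V2 fulfillment event that this bot uses.

SAMPLE_FALLBACK_EVENT is a trimmed, hand-written illustration;
describe_event is a hypothetical helper.
"""

SAMPLE_FALLBACK_EVENT = {
    "messageVersion": "1.0",
    "invocationSource": "FulfillmentCodeHook",
    "inputMode": "Text",
    "sessionId": "example-session-id",
    # The user's raw text: this is what we send to the LLM.
    "inputTranscript": "What is Amazon Kendra?",
    "sessionState": {
        "sessionAttributes": {},
        "intent": {
            "name": "FallbackIntent",
            "slots": {},
            "state": "ReadyForFulfillment",
            "confirmationState": "None",
        },
    },
}


def describe_event(event):
    """Extract the intent name, the user's query, and session attributes."""
    intent = event["sessionState"]["intent"]
    return {
        "intent_name": intent["name"],
        "user_query": event["inputTranscript"],
        "session_attributes": event["sessionState"].get("sessionAttributes", {}),
    }
```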

It’s time for our favorite part. The code.

4. In your Lambda function, import boto3 (the AWS SDK for Python) and LangChain, which we’ll use as our orchestrator for Retrieval Augmented Generation (RAG). If you’re not familiar with RAG, see my previous article.

```python
import json

import boto3
from langchain.llms.bedrock import Bedrock
from langchain.chains import RetrievalQA
from langchain.retrievers import AmazonKendraRetriever
from langchain.prompts import PromptTemplate
```

NOTE: You will need to import LangChain as a Lambda layer. These instructions using CodeBuild worked for me.

5. When Lambda fulfills a Lex intent, Lex sends a JSON input event to your function and expects a JSON response in return. The following helper functions read attributes from the event and build that response for our Lambda handler.

```python
def get_slots(intent_request):
    # Slots collected for the current intent (may be None or empty).
    return intent_request['sessionState']['intent']['slots']


def get_slot(intent_request, slotName):
    # Return the interpreted value of one slot, or None if it wasn't filled.
    slots = get_slots(intent_request)
    if slots is not None and slotName in slots and slots[slotName] is not None:
        return slots[slotName]['value']['interpretedValue']
    else:
        return None


def get_session_attributes(intent_request):
    # Session attributes persist across turns of the conversation.
    sessionState = intent_request['sessionState']
    if 'sessionAttributes' in sessionState:
        return sessionState['sessionAttributes']
    return {}


def elicit_intent(intent_request, session_attributes, message):
    # Tell Lex to listen for the user's next intent, optionally sending a message.
    return {
        'sessionState': {
            'dialogAction': {
                'type': 'ElicitIntent'
            },
            'sessionAttributes': session_attributes
        },
        'messages': [message] if message is not None else None,
        'requestAttributes': intent_request.get('requestAttributes')
    }


def close(intent_request, session_attributes, fulfillment_state, message):
    # End the dialog for this intent and record its fulfillment state.
    intent_request['sessionState']['intent']['state'] = fulfillment_state
    return {
        'sessionState': {
            'sessionAttributes': session_attributes,
            'dialogAction': {
                'type': 'Close'
            },
            'intent': intent_request['sessionState']['intent']
        },
        'messages': [message],
        'sessionId': intent_request['sessionId'],
        'requestAttributes': intent_request.get('requestAttributes')
    }
```

6. By default, your Lambda function has a lambda_handler function, which Lambda calls on every invocation. Here, lambda_handler passes the event to dispatch, which runs a function called FallbackIntent whenever the user triggers FallbackIntent.

```python
def dispatch(intent_request):
    # Route the request to the handler for the recognized intent.
    intent_name = intent_request['sessionState']['intent']['name']
    if intent_name == 'FallbackIntent':
        return FallbackIntent(intent_request)
    raise Exception('Intent with name ' + intent_name + ' not supported')


def lambda_handler(event, context):
    # Entry point that Lambda calls for each Lex request.
    response = dispatch(event)
    return response
```
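As the bot gains intents, the if-chain in dispatch grows. One common alternative, shown here as a sketch rather than the author’s code, is a dictionary that maps intent names to handler functions. The handle_fallback function below is a hypothetical placeholder that echoes the transcript in a minimal Close response instead of calling the LLM, which also makes the routing easy to test locally.

```python
"""Sketch: a table-driven variant of dispatch() with a placeholder handler.

handle_fallback is hypothetical; the article's FallbackIntent calls the
LLM chain instead of echoing the input.
"""


def handle_fallback(intent_request):
    # Placeholder: echo the transcript back in a minimal Lex V2 Close response.
    intent = intent_request["sessionState"]["intent"]
    intent["state"] = "Fulfilled"
    return {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            "intent": intent,
        },
        "messages": [
            {"contentType": "PlainText",
             "content": "You said: " + intent_request["inputTranscript"]}
        ],
    }


# Map intent names to handler functions; add entries as the bot grows.
HANDLERS = {
    "FallbackIntent": handle_fallback,
}


def dispatch(intent_request):
    intent_name = intent_request["sessionState"]["intent"]["name"]
    handler = HANDLERS.get(intent_name)
    if handler is None:
        raise ValueError(f"Intent with name {intent_name} not supported")
    return handler(intent_request)


def lambda_handler(event, context):
    return dispatch(event)
```

Calling lambda_handler(event, None) with a sample event lets you exercise the routing without deploying.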

7. Let’s build out this function. In Lex’s input event, the inputTranscript attribute is the user’s query. So, let’s pass that into a helper function that calls Bedrock and our Kendra index.

```python
def FallbackIntent(intent_request):
    session_attributes = get_session_attributes(intent_request)
    # inputTranscript holds the user's query.
    query = intent_request["inputTranscript"]
    # Call function to build the LLM chain using LangChain.
    qa = build_chain()
    text = qa({"query": query})['result']
    message = {
        'contentType': 'PlainText',
        'content': text
    }
    fulfillment_state = "Fulfilled"
    return close(intent_request, session_attributes, fulfillment_state, message)
```

8. Finally, let’s create the build_chain function. First, we create a Bedrock runtime client with boto3, then wrap it in LangChain’s Bedrock LLM class.

```python
def build_chain():
    # Bedrock runtime client used to invoke the model.
    bedrock = boto3.client(
        service_name='bedrock-runtime',
        region_name='us-east-1',
        endpoint_url='https://bedrock-runtime.us-east-1.amazonaws.com/'
    )
    llm = Bedrock(
        model_id="anthropic.claude-v2",
        client=bedrock,
        model_kwargs={'max_tokens_to_sample': 200}
    )
    # Retriever that fetches relevant passages from our Kendra index.
    retriever = AmazonKendraRetriever(
        index_id="<kendra-index-id>",
        region_name="<kendra-index-region>"
    )

    # Prompt template for Anthropic Claude V2 on Bedrock.
    # The string is not indented so no stray spaces end up in the prompt.
    prompt_template = """

H: You are a chatbot for prospective AWS users.
Use the following pieces of context to provide a concise answer to the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.
<context>
{context}
</context>

Question: {question}

A:
Based on the content:"""

    PROMPT = PromptTemplate(
        template=prompt_template, input_variables=["context", "question"]
    )

    # "stuff" inserts all retrieved documents into {context} in a single prompt.
    qa = RetrievalQA.from_chain_type(
        llm=llm,
        chain_type="stuff",
        retriever=retriever,
        chain_type_kwargs={"prompt": PROMPT}
    )
    return qa
```

As you can see above, we’ve passed in a prompt template that tailors the output to be user-friendly. All Amazon Bedrock customers consuming Anthropic’s Claude must send prompts to the model in the following syntax: “\n\nHuman: <Customer Prompt>\n\nAssistant:”.
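To see what that format looks like on the wire, here is a sketch that calls Claude v2 on Bedrock directly with boto3’s invoke_model, without LangChain. It uses the Text Completions request fields (prompt, max_tokens_to_sample) and reads the completion field from the response; build_claude_body and ask_claude are hypothetical helper names.

```python
"""Sketch: call Claude v2 on Bedrock directly with boto3, without LangChain.

build_claude_body and ask_claude are hypothetical helper names.
"""
import json

MODEL_ID = "anthropic.claude-v2"


def build_claude_body(user_prompt, max_tokens=200):
    """Wrap a prompt in the Human/Assistant format Claude v2 expects."""
    return json.dumps({
        "prompt": f"\n\nHuman: {user_prompt}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
    })


def ask_claude(bedrock_runtime, user_prompt, max_tokens=200):
    """Send one prompt to Claude v2 and return the generated text."""
    response = bedrock_runtime.invoke_model(
        modelId=MODEL_ID,
        body=build_claude_body(user_prompt, max_tokens),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream whose JSON has a "completion" field.
    payload = json.loads(response["body"].read())
    return payload["completion"]
```

With a client from boto3.client("bedrock-runtime", region_name="us-east-1"), ask_claude(client, "What is Amazon Lex?") returns the model’s raw completion text.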

To equip our assistant with a knowledge base, we use a Kendra retriever for the Kendra index we created. The retriever fetches documents relevant to the user’s query, and the RetrievalQA chain inserts them as {context} in our prompt template before sending the prompt to Claude.
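The "stuff" chain type is the simplest way to combine retrieval with generation: every retrieved document is concatenated into the {context} slot of one prompt, and that single prompt goes to the model. The sketch below is a simplified re-implementation for illustration, not LangChain’s code; stuff_prompt and TEMPLATE are hypothetical names, and TEMPLATE is a shortened version of the prompt used in build_chain.

```python
"""Sketch: what the "stuff" chain type does, re-implemented by hand.

A simplified illustration, not LangChain's implementation.
"""

TEMPLATE = (
    "\n\nHuman: You are a chatbot for prospective AWS users.\n"
    "Use the following pieces of context to provide a concise answer to the "
    "question at the end. If you don't know the answer, just say that you "
    "don't know, don't try to make up an answer.\n"
    "<context>\n{context}\n</context>\n\n"
    "Question: {question}\n\nAssistant:"
)


def stuff_prompt(documents, question, template=TEMPLATE):
    """Fill the template with all documents joined by blank lines."""
    context = "\n\n".join(documents)
    return template.format(context=context, question=question)
```

Because everything goes into one prompt, the retrieved text must fit in the model’s context window; LangChain’s other chain types, such as map_reduce and refine, trade extra LLM calls for handling more documents.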

9. Save and Deploy your lambda function. We’ve completed the back-end for our Lex chatbot!

Create a Digital Person Front-End using Soul Machines

Soul Machines is a paid service, but if your organization has a subscription, you can start using it. Use the following documentation to connect your Lex chatbot to your Soul Machines digital person.

Conclusion

A digital personal assistant can significantly boost efficiency by automating routine tasks, allowing individuals to focus on more important and creative aspects of their work or personal lives. Here, we built a customer service agent, but the use cases for a lifelike digital human interacting with an end user are, well, endless.
